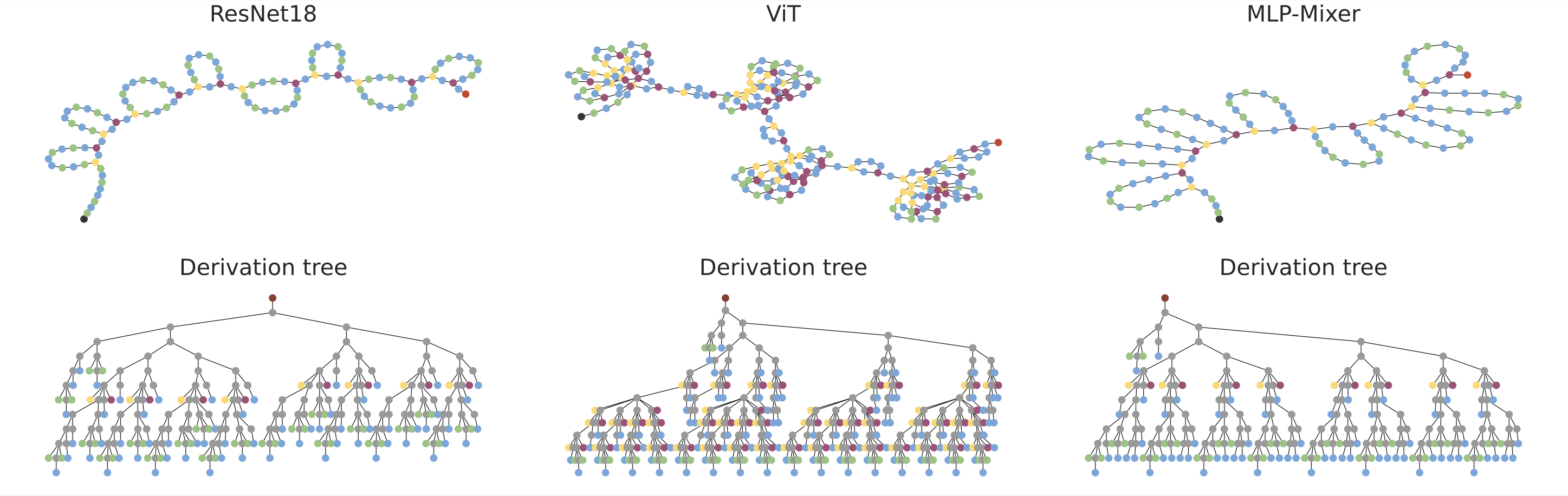

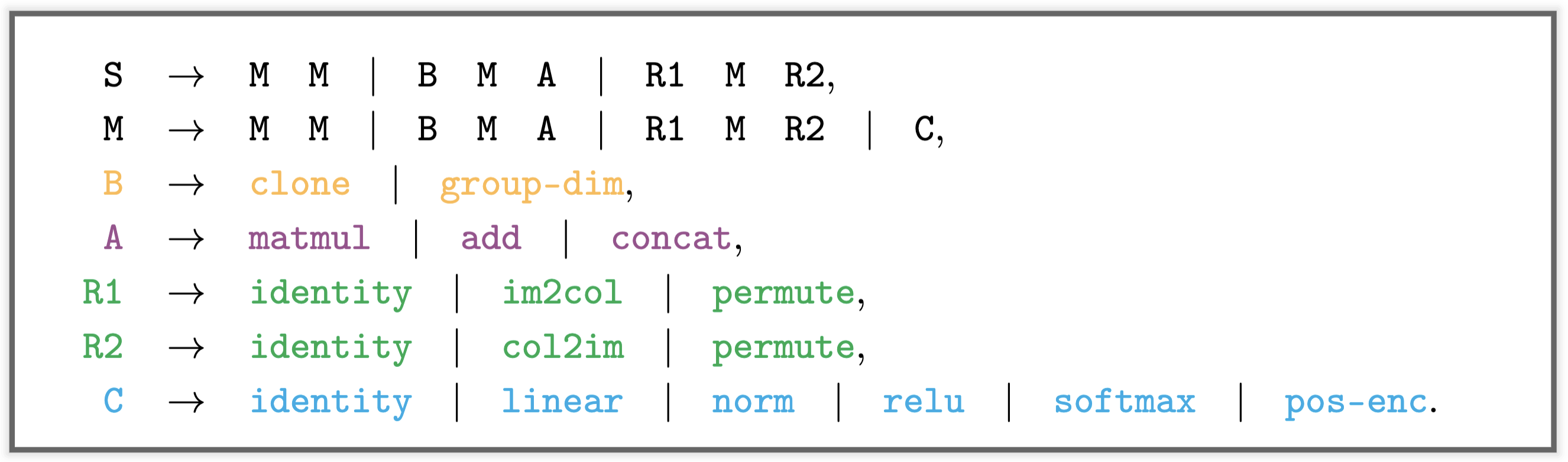

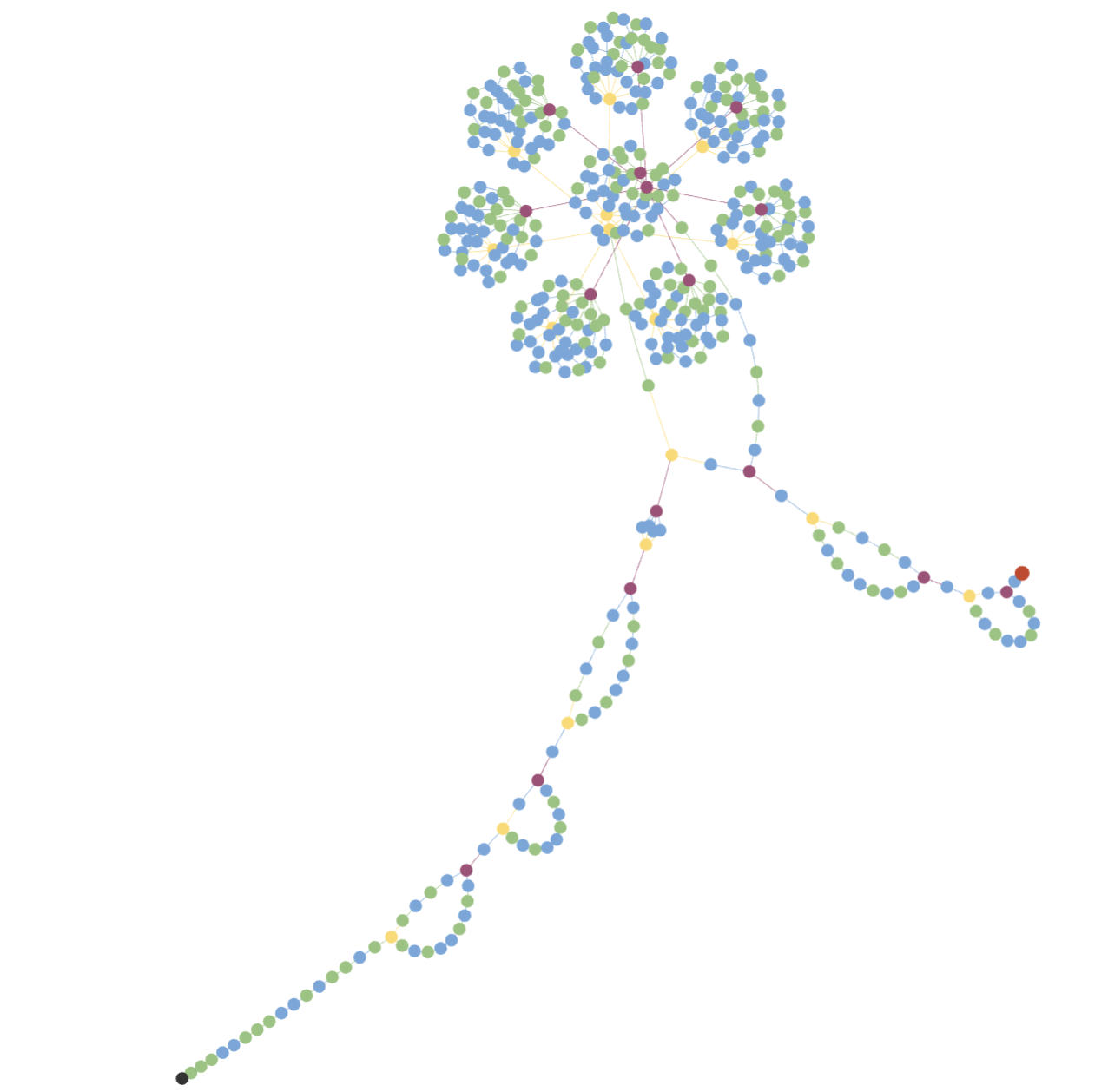

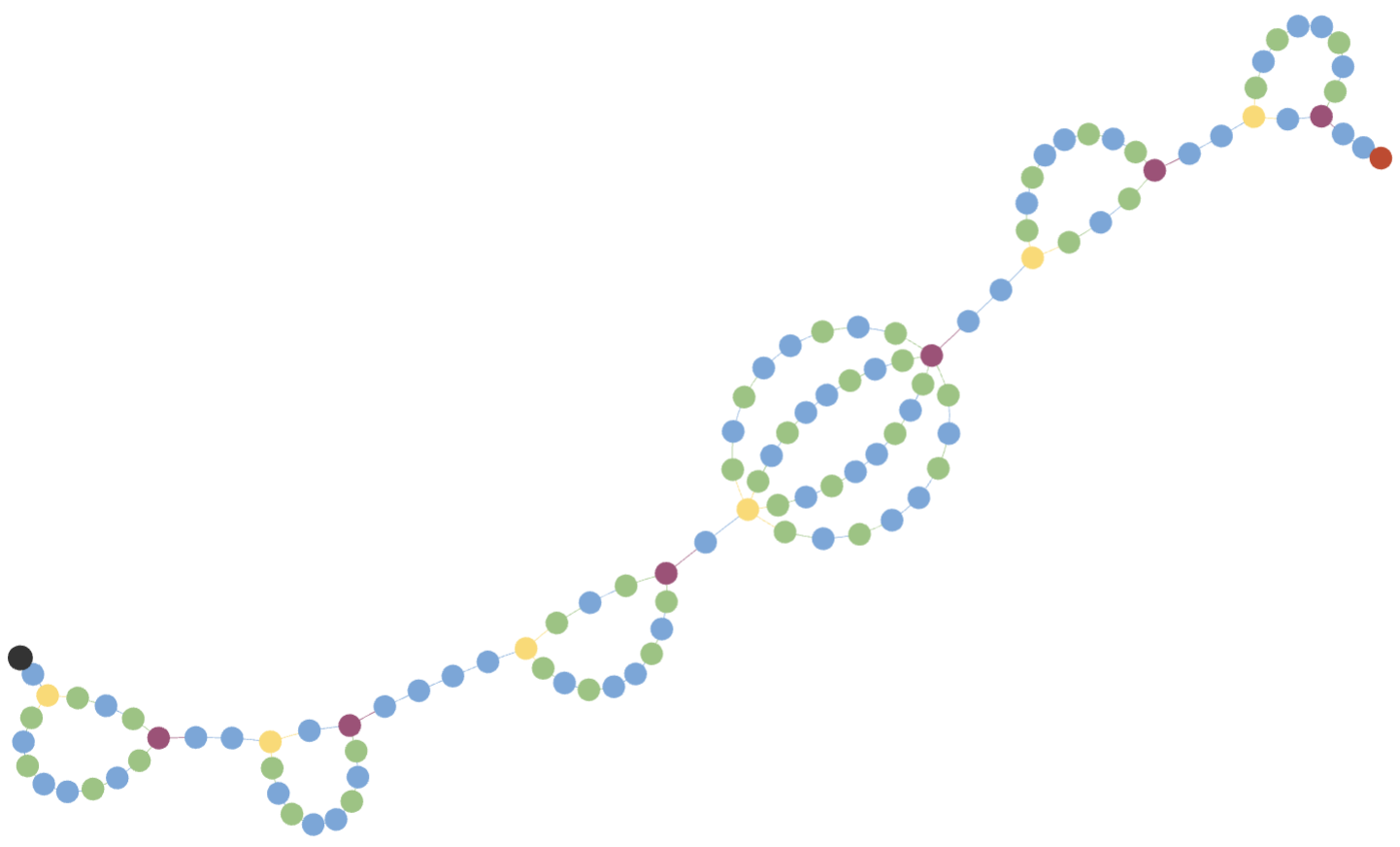

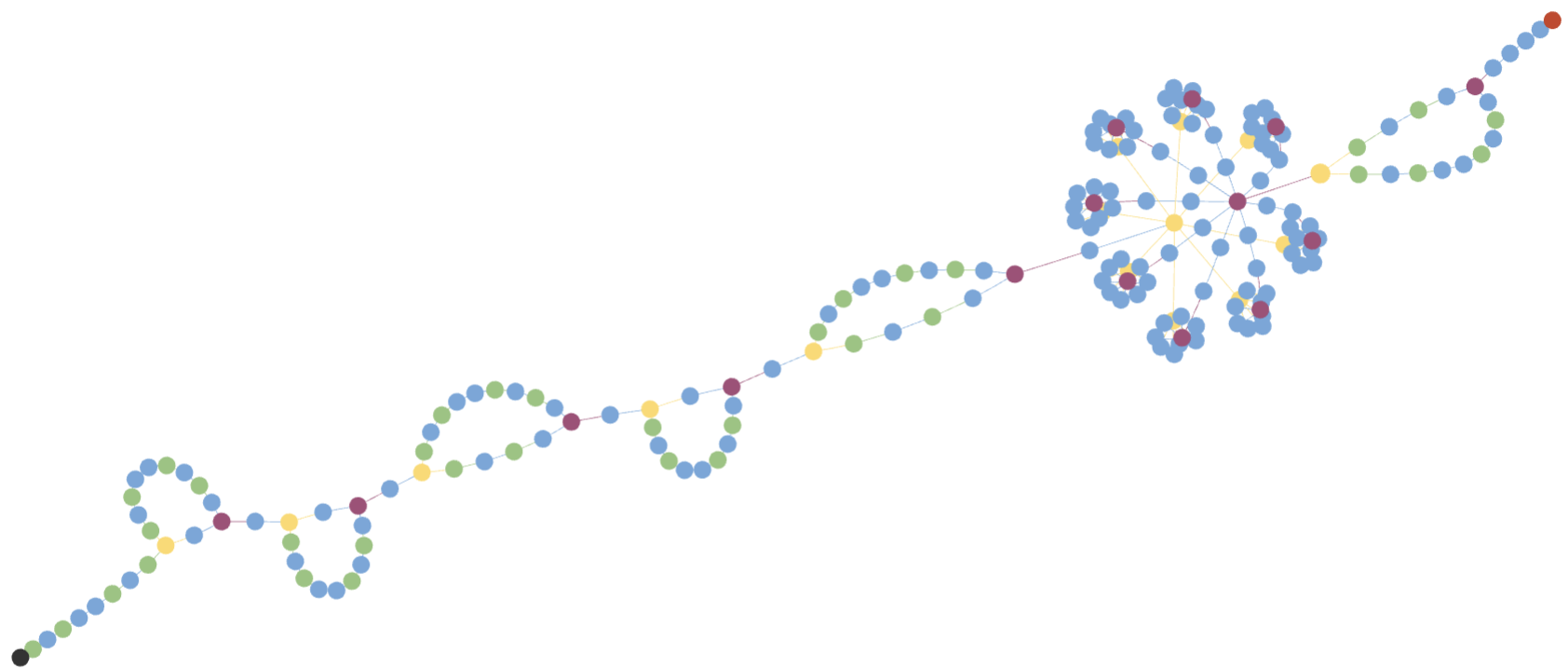

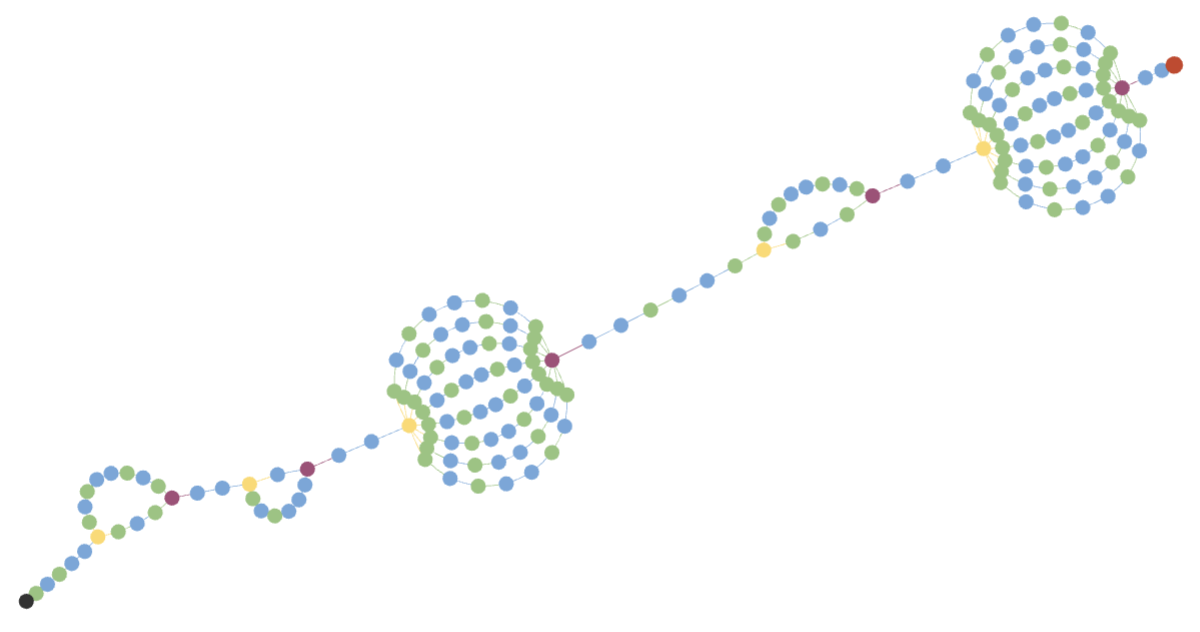

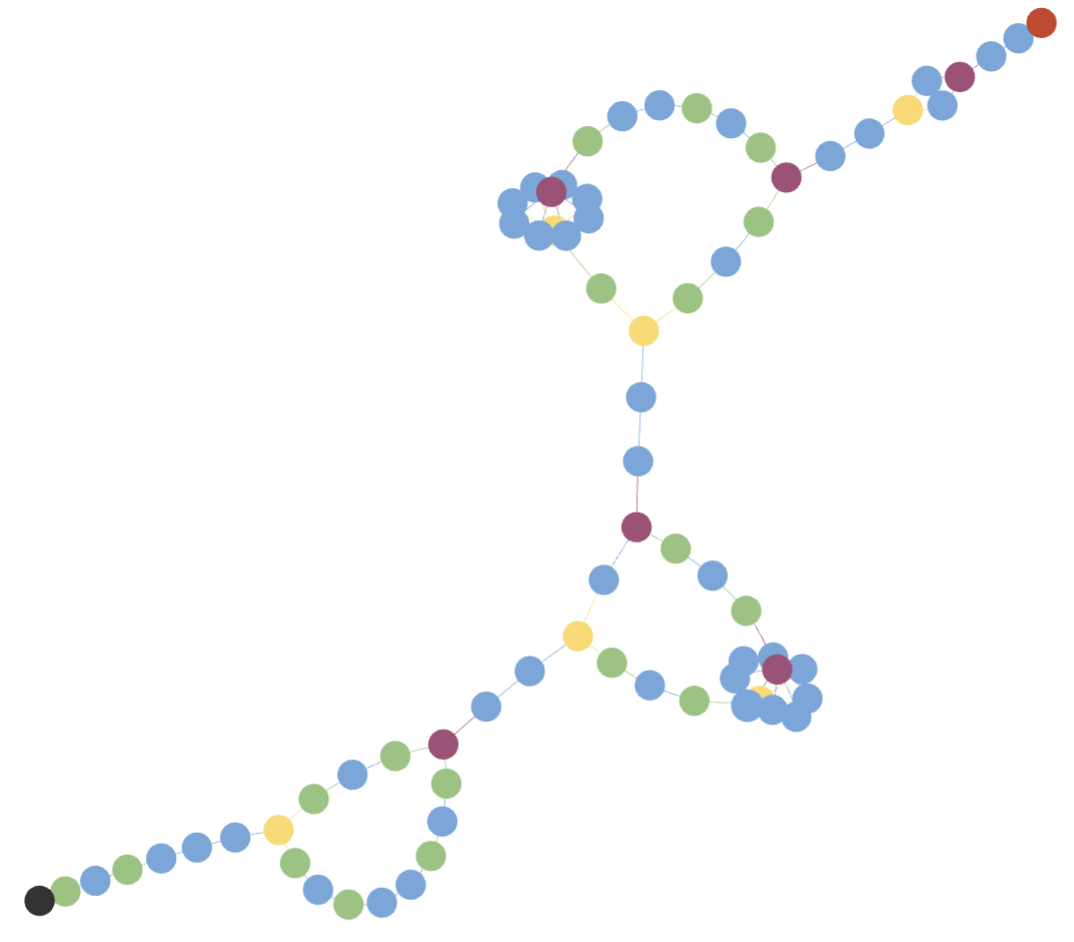

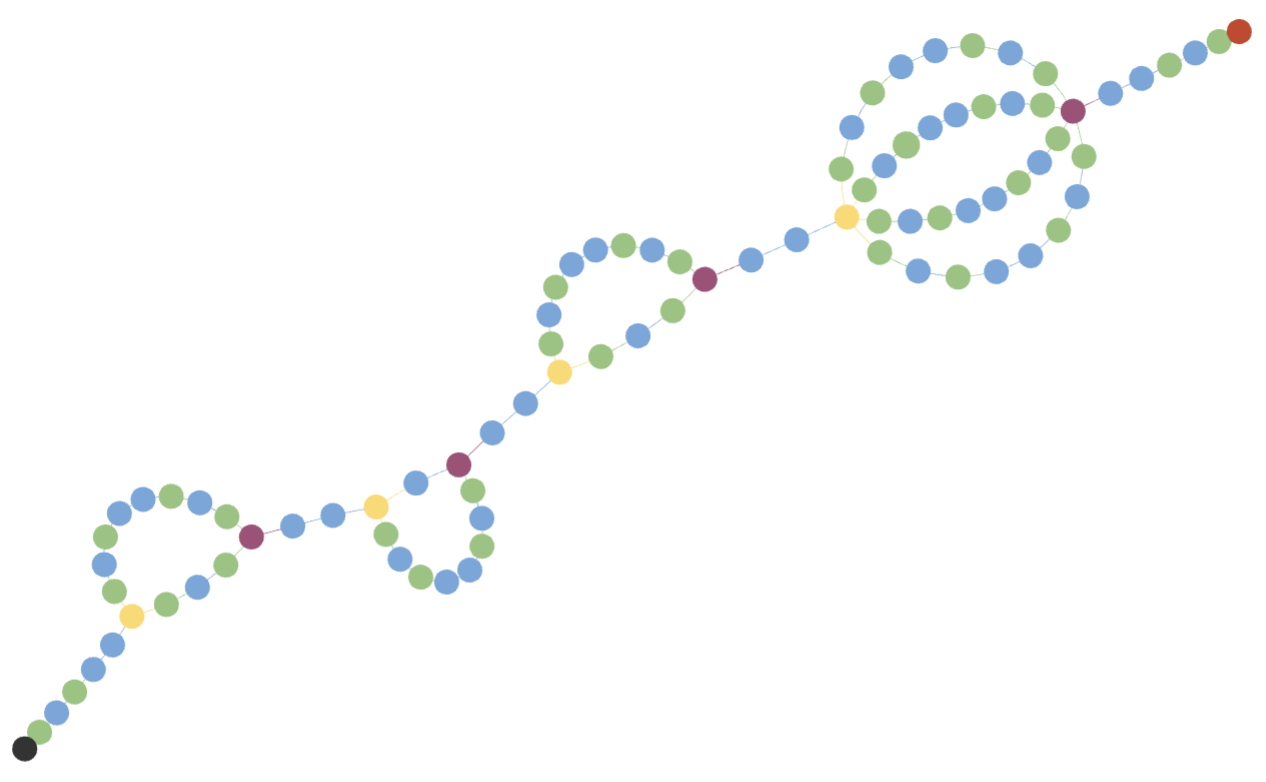

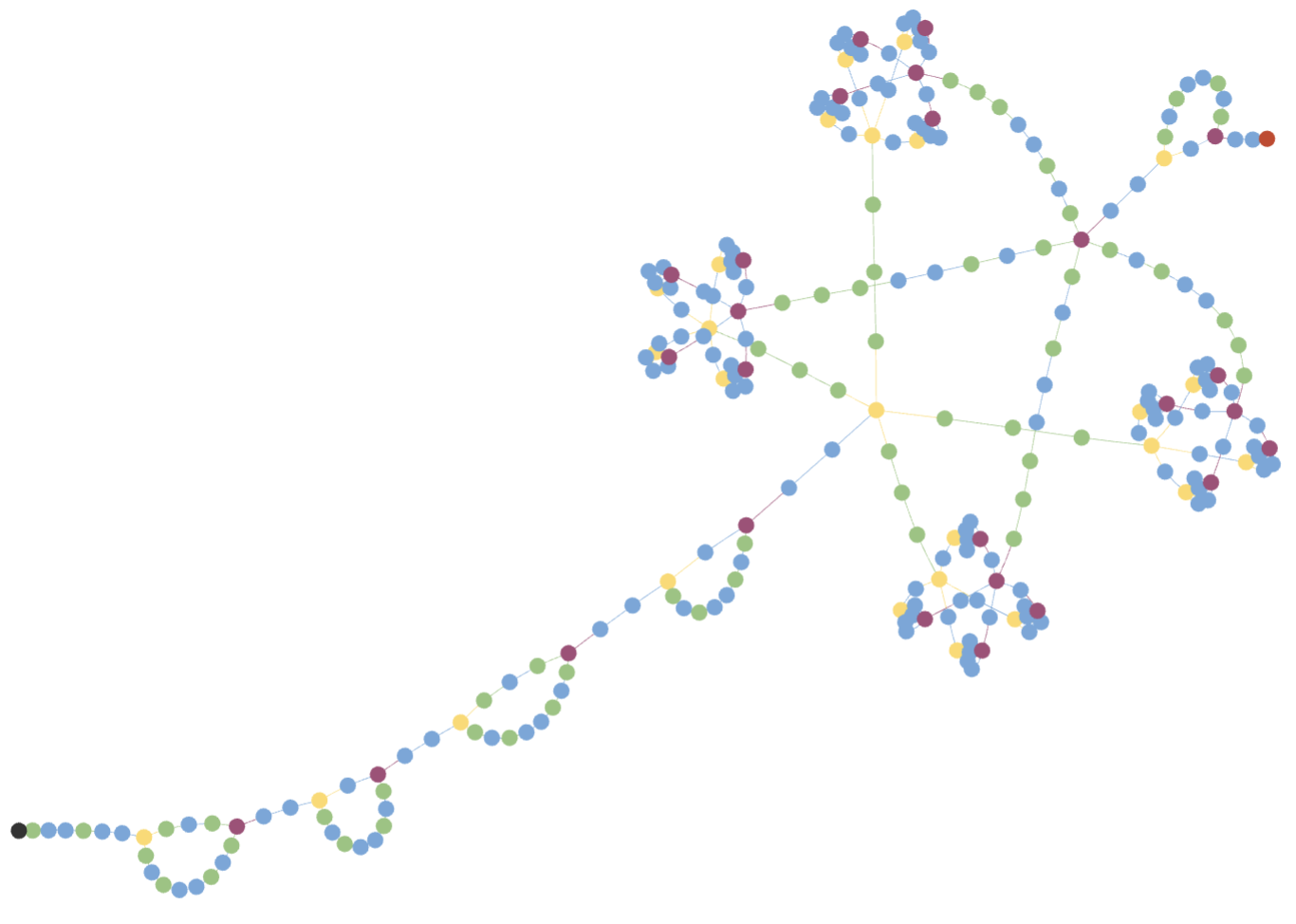

Diverse architectures can be represented in einspace, including ConvNets and transformers. Here are three state-of-the-art networks (top) and their CFG derivation trees (bottom).

Neural architecture search (NAS) finds high-performing networks for a given task. Yet the results of NAS are fairly prosaic; they did not, for example, create a shift from convolutional structures to transformers. This is not least because the search spaces in NAS often aren't diverse enough to include such transformations a priori. Instead, for NAS to provide greater potential for fundamental design shifts, we need a novel, expressive search space design that is built from more fundamental operations. To this end, we introduce einspace, a search space based on a parameterised probabilistic context-free grammar (CFG). Our space is versatile, supporting architectures of various sizes and complexities, while also containing diverse network operations which allow it to model convolutions, attention components and more. It contains many existing competitive architectures, and provides flexibility for discovering new ones. Using this search space, we perform experiments to find novel architectures, as well as improvements on existing ones, on the diverse Unseen NAS datasets. We show that competitive architectures can be obtained by searching from scratch, and we consistently find large improvements when initialising the search with strong baselines. We believe that this work is an important advancement towards a transformative NAS paradigm where search space expressivity and strategic search initialisation play key roles.
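To make the idea of a search space defined by a probabilistic CFG more concrete, here is a minimal sketch of sampling an architecture as a derivation tree. The grammar below is a toy invented for illustration: the rule names (`seq`, `branch`, `op`), the terminal operations, the probabilities and the depth cap are all assumptions, not the actual einspace grammar.

```python
import random

# Toy production rules for a single non-terminal M ("module").
# Each entry is (probability, rule kind). These values are illustrative
# assumptions and do not come from the einspace paper.
RULES = [
    (0.4, "seq"),     # M -> seq(M, M): run two modules one after the other
    (0.2, "branch"),  # M -> branch(M, M): run two modules in parallel
    (0.4, "op"),      # M -> op: a single terminal operation
]
TERMINAL_OPS = ["linear", "norm", "relu", "softmax"]  # hypothetical terminals


def sample_module(rng, depth=0, max_depth=4):
    """Sample a derivation tree for M as nested tuples."""
    if depth >= max_depth:
        # Force a terminal so that every sampled tree is finite.
        return ("op", rng.choice(TERMINAL_OPS))
    kinds = [kind for _, kind in RULES]
    weights = [prob for prob, _ in RULES]
    kind = rng.choices(kinds, weights=weights, k=1)[0]
    if kind == "op":
        return ("op", rng.choice(TERMINAL_OPS))
    left = sample_module(rng, depth + 1, max_depth)
    right = sample_module(rng, depth + 1, max_depth)
    return (kind, left, right)


def count_ops(tree):
    """Number of terminal operations (leaves) in a derivation tree."""
    if tree[0] == "op":
        return 1
    return count_ops(tree[1]) + count_ops(tree[2])


def tree_depth(tree):
    """Depth of a derivation tree; a single terminal has depth 0."""
    if tree[0] == "op":
        return 0
    return 1 + max(tree_depth(tree[1]), tree_depth(tree[2]))


if __name__ == "__main__":
    rng = random.Random(0)
    tree = sample_module(rng)
    print(tree)
    print("operations:", count_ops(tree), "depth:", tree_depth(tree))
```

Changing the rule probabilities shifts the distribution over architecture sizes: putting more mass on `op` yields shallower trees, while more mass on `seq` and `branch` yields deeper, wider ones. A search procedure could start from such samples or from the derivation tree of an existing baseline.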

@inproceedings{ericsson2024einspace,
  title={einspace: Searching for Neural Architectures from Fundamental Operations},
  author={Linus Ericsson and Miguel Espinosa and Chenhongyi Yang and Antreas Antoniou and Amos Storkey and Shay B. Cohen and Steven McDonagh and Elliot J. Crowley},
  year={2024},
  booktitle={NeurIPS},
  eprint={2405.20838},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}